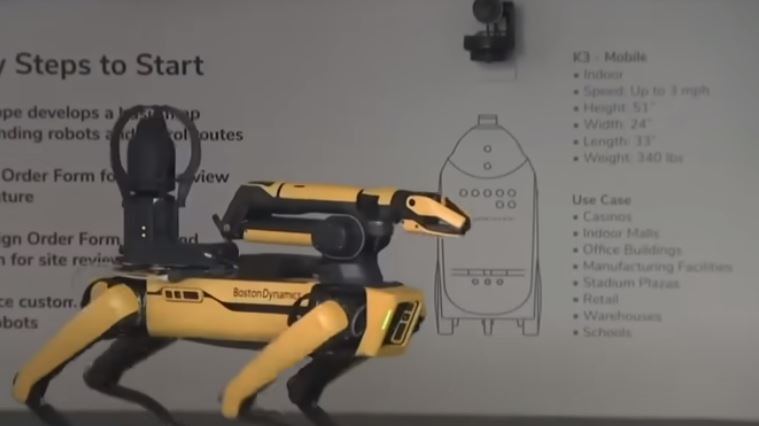

Fast forward 17 years to 2019, and this type of advance knowledge has moved from the stuff of science fiction movies to reality.

As reported by Bloomberg Quint, Japanese startup Vaak has developed artificial intelligence software that uses footage captured on security cameras to identify potential shoplifters by zeroing in on suspicious behaviors such as fidgeting and restlessness.

Computer algorithms spot these and other behaviors and alert staff members via a smartphone app. Employees can then approach the “suspicious” person and ask whether they need help. The theory is that being approached in this way will put off many would-be shoplifters, who will leave the store without stealing anything.

This technology may sound proactive and safe at first glance, but deeper reflection raises many questions. For example, what are the risks of staff members approaching potentially armed thieves who might react violently? On the other hand, might over-zealous employees who themselves are armed inadvertently harm an innocent person? Since the algorithms that detect supposedly suspicious behaviors are based on biased and often racist crime data, could minority groups be unfairly targeted? And what if someone is just naturally nervous and fidgety, or even high on drugs?

What the studies show about AI behavior-detection systems

As reported by the U.K.’s Daily Mail, Vaak is far from the only tech company to develop this type of software.

Secretive trials of similar programs have reportedly been undertaken in China and the United States. In addition, it emerged last year that Silicon Valley data-analytics company Palantir had partnered with the New Orleans Police Department to test a system designed to predict both who was most likely to commit a crime and where such crimes might take place.

Studies indicate, however, that these types of programs are based on flawed and biased information.

The Mail reported:

An MIT study published this past fall found that many popular AI systems exhibit racist and sexist leanings.

Researchers have urged others to use better data to ensure biases are eliminated. …

Chicago's police department uses a notorious 'heat list,' which is an algorithm-generated list that singles out people who are most likely to be involved in a shooting.

However, many experts have identified issues with Chicago's heat list.

The government-funded RAND Corporation published a report saying that the heat list wasn't nearly as effective as a standard wanted list.

It could also encourage a new form of profiling that draws unnecessary police attention to people.

Another academic study found that the heat list can have a 'disparate impact' on poor communities of color.

While some may suggest that the way to eliminate these issues is simply to create better algorithms, MIT Ph.D. student Irene Chen notes that an algorithm can only ever be as good as the data it’s based on. Before programs built on these algorithms can be relied upon, therefore, far better data, free of any slant against a particular minority or income group, would need to be gathered.

And that isn’t likely to happen, is it?
